Today was the last day of RSA 2024. I attended four sessions and finished the day with a live concert by Alicia Keys. The sessions weren’t great; it seems as if the least experienced speakers are left for the last day. Alicia Keys more than made up for it, however: she was awesome.

The sessions I attended today were:

- Avoiding Common Design and Security Mistakes in Cloud AI / ML Environments

- To Patch or not to Patch OT – A Risk Management Decision

- Hacking the Mind: How Adversaries use Manipulation and Magic to Mislead

- EXPOSURE: The 5th RSAC SOC Report

Avoiding Common Design and Security Mistakes in Cloud AI / ML Environments

In this session, Natalia Semenova explained how to set up your AI and ML environments, common mistakes people make, and how to prevent them. Although Natalia appears to be very knowledgeable on the topic, her speaking skills are not great, which made the session a bit dull. However, I do think it’s worth taking a look at her slides and reviewing the session if you have the opportunity.

Natalia began with some intriguing points:

- Cloud providers provide guidelines for setting up your environment. However, you’ll need to tailor these to suit your company and the specific use of the environment.

- Companies often only think about the best-case scenario for their AI/ML pipelines. However, it’s crucial to remember that an AI/ML pipeline includes both your code and your data. This means that all code pipeline standards apply, but you also need to protect your training data!

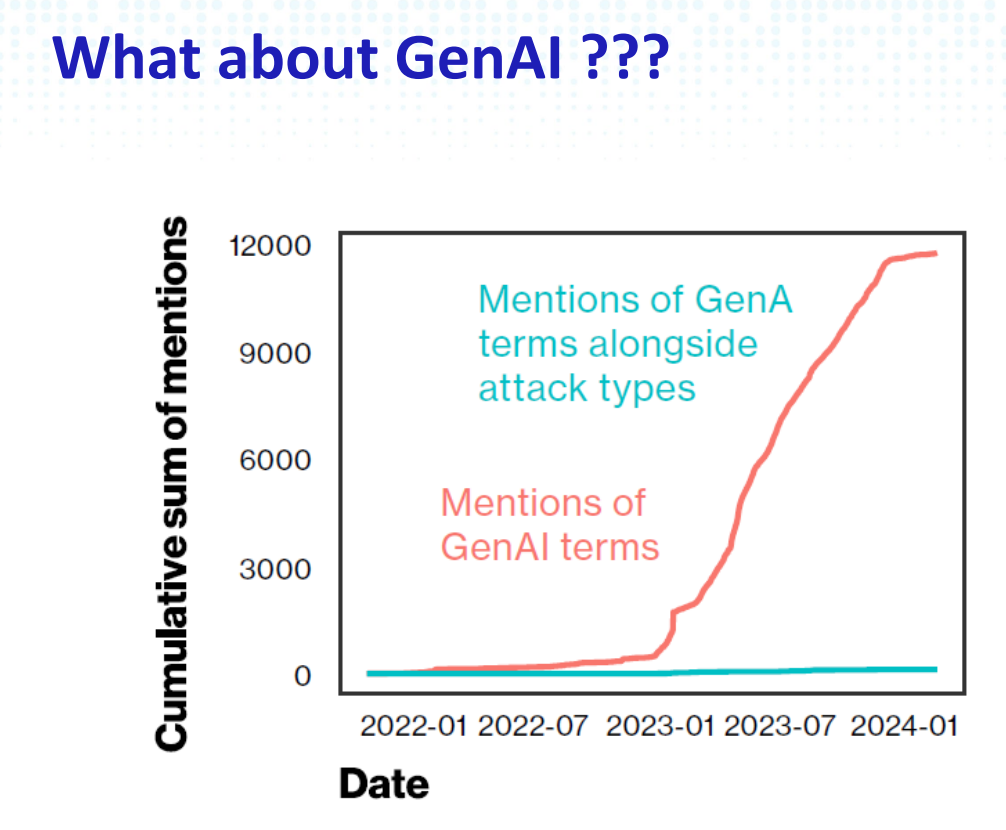

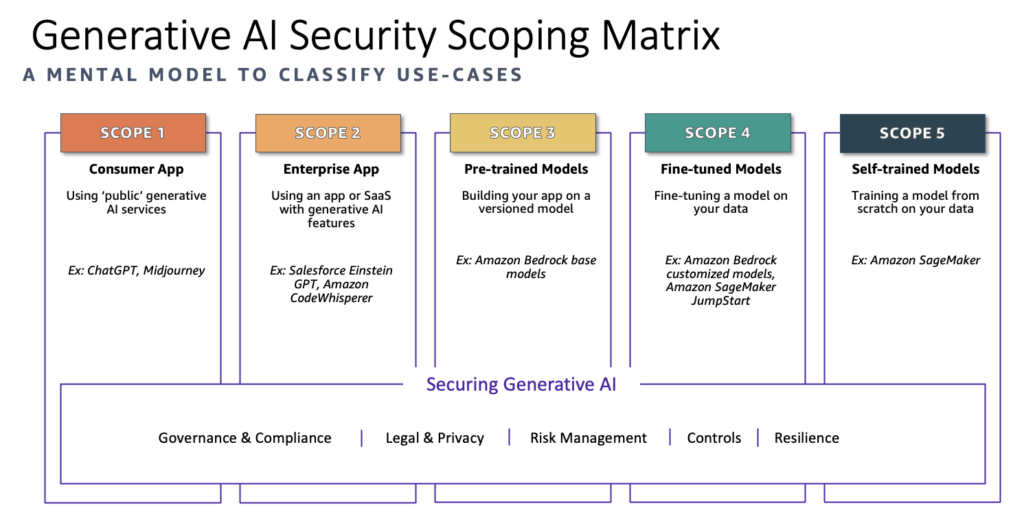

Types of GenAI usage

Natalia used the Generative AI Security Scoping Matrix by AWS. This model defines the same scopes that the Forrester analyst used in her presentation about DLP on day one of RSA.

From scope 1 to scope 5, the amount you as a company can influence increases, but remember: with great power comes great responsibility.

Define what you control

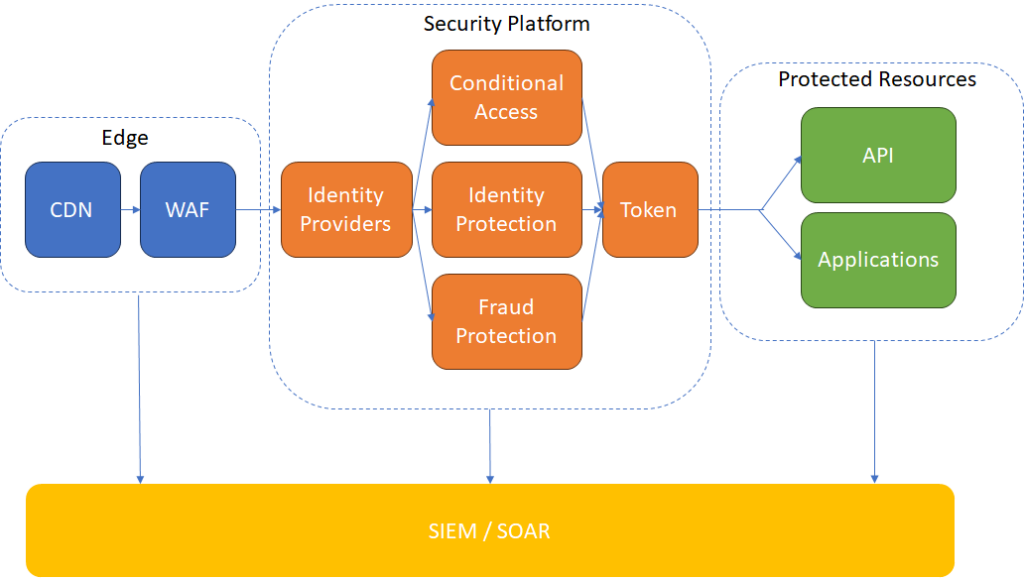

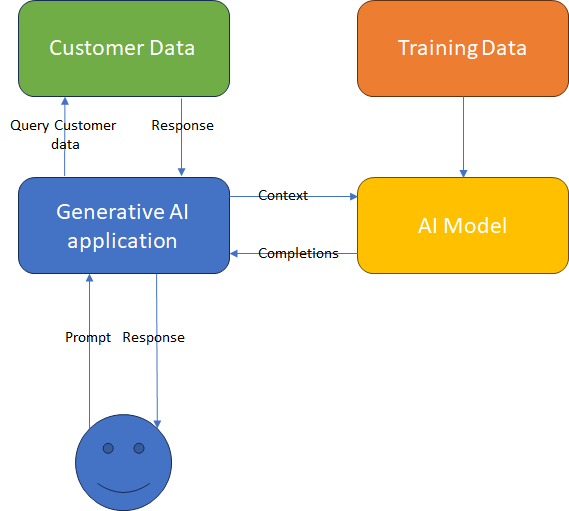

The diagram above gives a broad view of how an AI application might look, regardless of its scope. Depending on the scope, you will have control over specific blocks and data flows. For example, in Scope 5, which involves an in-house developed AI model, all blocks and flows are within your control. For a pretrained model, however, you won’t have control over the training data (as the model was already trained) or the AI model itself, but you will have control over all other flows and blocks in the diagram. Ultimately, you should strive to implement security controls on every block and flow that you control.

Critical components to secure

The presentation mentioned six critical components to secure:

- Identity and Access Management

Restrict permissions for Jupyter notebooks (previously running under the equivalent of a root account). Organize data into buckets based on usage patterns or departments. Provide access to these buckets on a need-to-know basis. Finally, establish a pre-provisioned environment for the quick and secure deployment of new models and experiments.

- ML Models

Treat an ML model like any other algorithm. Store the code and data in a private, secure Git repository, conduct code scanning, ensure that containers originate from a trusted repository, utilize prebuilt containers, and encrypt the containers at rest.

- Software composition

Review software packages, and make sure they come from a repository with approved software packages. Review the privacy policies of packages and opt out of data-collection services.

- Licensing

Evaluate the licenses for the packages and software you are using.

- Compute resources

Enable encryption in transit, enable internode encryption, and set limits on resource usage.

- (Training) Data

Use only trusted data sources for training data. Verify whether the intended data can actually be used or whether consent is necessary. Have a plan for data subjects who revoke their consent at a later stage. Tag data and buckets that contain sensitive data.
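To make the software composition point a bit more concrete, here is a minimal sketch in Python of checking installed packages against an approved list. It was not part of the presentation: the `APPROVED_PACKAGES` set and the function names are hypothetical, and in practice the list would come from your internal, approved repository.

```python
from importlib.metadata import distributions

# Hypothetical allowlist for illustration only; replace with the contents
# of your approved internal package repository.
APPROVED_PACKAGES = {"numpy", "pandas", "scikit-learn"}


def normalize(name: str) -> str:
    """Normalize a package name so 'scikit_learn' and 'Scikit-Learn' compare equal."""
    return name.lower().replace("_", "-").replace(".", "-")


def find_unapproved(installed: list[str], approved: set[str]) -> list[str]:
    """Return the sorted names of installed packages that are not on the approved list."""
    approved_norm = {normalize(name) for name in approved}
    return sorted({normalize(name) for name in installed} - approved_norm)


def installed_packages() -> list[str]:
    """List the names of all distributions visible to the current interpreter."""
    return [d.metadata["Name"] for d in distributions() if d.metadata["Name"]]


if __name__ == "__main__":
    for name in find_unapproved(installed_packages(), APPROVED_PACKAGES):
        print(f"not on the approved list: {name}")
```

A check like this could run in the pre-provisioned environment or in the pipeline, so that an unapproved package is flagged before an experiment starts.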

Logging and Monitoring

In the slide deck, Natalia presents an example architecture for monitoring in both AWS and Azure environments. She recommends collaborating with your data scientists to assist in setting up effective monitoring rules. Key considerations for monitoring include:

- Endpoint

- Model accuracy over time

- Model biases

- Data drift
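As an illustration of what a data drift rule could look like, the sketch below computes the Population Stability Index (PSI) between a baseline sample and a current sample of one numeric feature. This is my own example, not something shown in the session; the function name, the bin count, and the commonly quoted rule of thumb that a PSI above 0.2 signals significant drift are assumptions you should validate with your data scientists.

```python
import math


def psi(baseline: list[float], current: list[float], bins: int = 10) -> float:
    """Population Stability Index of `current` relative to `baseline`.

    Bins are equal-width over the baseline range; values outside that
    range are counted in the first or last bin.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid a zero width for constant data

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    p, q = proportions(baseline), proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


if __name__ == "__main__":
    training = [float(v) for v in range(100)]
    production = [v + 30 for v in training]  # simulated shift in the feature
    print(f"PSI: {psi(training, production):.2f}")
```

Identical distributions give a PSI of 0, and the value grows as the current data moves away from what the model was trained on.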

To Patch or not to Patch – A Risk Management Decision

Ahmik Hindman from Rockwell Automation, a US-based OT manufacturer, presented a strategy for implementing a patch management program in Operational Technology (OT) environments. Last year, he delivered a presentation on securing OT, and this presentation served as a continuation of that topic.

I found this presentation very valuable, as Ahmik demonstrated extensive experience with OT and highlighted specific challenges relevant to this domain. It became evident from the talk that a generic patching strategy cannot be applied to OT systems.

Understand compliance and standards

Some industries, like energy, oil and gas, are part of critical infrastructure and have industry specific compliance standards that prescribe your OT patching standards. For other industries you can have a look at NIST SP 800-82 Rev. 3, a guideline on how to secure and patch your OT environment.

Detailed install base

Before initiating the patching process, it is essential to have a clear understanding of the environment. In the case of OT, an OT-specific scanning tool is necessary, as standard tools may struggle to accurately identify firmware versions and hardware manufacturers, and may generate numerous false positives.

Furthermore, a comprehensive scan is required to identify the backplane networks, which are typically managed from the PLC and do not utilize the TCP/IP stack.

Initial vulnerability prioritization

Get an OT-specific tool that can correlate your OT inventory with the CVE database.
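Conceptually, this correlation is a join between your install base and the vulnerability records. The sketch below shows the idea in Python; it is not how any particular OT tool works, and the `Asset` and `CveRecord` structures, the vendor and product names, and the CVE identifiers are all hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    name: str
    vendor: str
    product: str
    firmware: str


@dataclass(frozen=True)
class CveRecord:
    cve_id: str
    vendor: str
    product: str
    affected_firmware: frozenset[str]


def correlate(assets: list[Asset], cves: list[CveRecord]) -> dict[str, list[str]]:
    """Map each asset name to the CVE ids that affect its exact firmware version."""
    matches: dict[str, list[str]] = {}
    for asset in assets:
        hits = [
            cve.cve_id
            for cve in cves
            if cve.vendor.lower() == asset.vendor.lower()
            and cve.product.lower() == asset.product.lower()
            and asset.firmware in cve.affected_firmware
        ]
        if hits:
            matches[asset.name] = sorted(hits)
    return matches


if __name__ == "__main__":
    # Placeholder data for illustration only.
    inventory = [
        Asset("line1-plc", "ExampleVendor", "PLC-100", "1.2"),
        Asset("line2-plc", "ExampleVendor", "PLC-100", "2.0"),
    ]
    records = [
        CveRecord("CVE-0000-0001", "examplevendor", "plc-100", frozenset({"1.1", "1.2"})),
    ]
    print(correlate(inventory, records))
```

The exact-match on firmware version is why the detailed install base from the previous step matters: without accurate versions, the correlation produces either gaps or false positives.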

Tailor CVEs to the application

Start from the published CVSS scores and use the CVSS calculator to adjust them to your environment. Depending on the controls in place and the specific applications, this can drastically change the scoring and urgency of certain patches.
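To show how much an environmental adjustment can move a score, here is a simplified sketch of the CVSS v3.1 arithmetic in Python. It only covers vulnerabilities with unchanged scope and leaves the temporal metrics undefined, so it is not a replacement for the official calculator. The example of modelling network segmentation as a modified attack vector of "Adjacent" is my own assumption, not something from the talk.

```python
import math

# Metric weights from the CVSS v3.1 specification (scope unchanged only).
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
PR = {"N": 0.85, "L": 0.62, "H": 0.27}
UI = {"N": 0.85, "R": 0.62}
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}
REQ = {"H": 1.5, "M": 1.0, "L": 0.5}  # security requirements (CR, IR, AR)


def roundup(value: float) -> float:
    """Round up to one decimal, using the float-safe method from the spec."""
    scaled = round(value * 100000)
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def score(av, ac, pr, ui, c, i, a, cr="M", ir="M", ar="M") -> float:
    """Score for scope-unchanged vectors with temporal metrics not defined.

    With all requirements at "M" this equals the base score; pass modified
    metric values and requirements to get an environment-adjusted score.
    """
    iss = min(
        1 - (1 - REQ[cr] * CIA[c]) * (1 - REQ[ir] * CIA[i]) * (1 - REQ[ar] * CIA[a]),
        0.915,
    )
    impact = 6.42 * iss
    if impact <= 0:
        return 0.0
    exploitability = 8.22 * AV[av] * AC[ac] * PR[pr] * UI[ui]
    return roundup(min(impact + exploitability, 10))


if __name__ == "__main__":
    # AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H as published by the vendor.
    print(score("N", "L", "N", "N", "H", "H", "H"))  # 9.8
    # Same vulnerability, device only reachable from an adjacent network.
    print(score("A", "L", "N", "N", "H", "H", "H"))  # 8.8
```

In this example a single compensating control drops the score from 9.8 to 8.8, which can be enough to move a patch out of the most urgent category.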

Prioritize critical CVEs

Begin with legacy or obsolete Industrial Automation and Control Systems (IACS) lacking security controls. Then, address your critical assets and vulnerabilities (CVEs) that are actively exploited or have exploit kits readily available.
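One way to turn this ordering into something repeatable is a simple sort key. The sketch below is my own interpretation of the order described above, not a scheme from the presentation; the `Finding` fields and the tier numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    asset: str
    cve_id: str
    cvss: float
    legacy_without_controls: bool  # obsolete IACS lacking security controls
    critical_asset: bool
    actively_exploited: bool       # exploited in the wild or exploit kit available


def priority_key(finding: Finding) -> tuple[int, float]:
    """Lower tuples sort first: tier, then highest CVSS score within the tier."""
    if finding.legacy_without_controls:
        tier = 0
    elif finding.critical_asset and finding.actively_exploited:
        tier = 1
    elif finding.critical_asset or finding.actively_exploited:
        tier = 2
    else:
        tier = 3
    return (tier, -finding.cvss)


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Return the findings in the order they should be reviewed."""
    return sorted(findings, key=priority_key)


if __name__ == "__main__":
    # Placeholder data for illustration only.
    backlog = [
        Finding("hmi-3", "CVE-0000-0003", 9.1, False, False, False),
        Finding("plc-1", "CVE-0000-0001", 6.5, True, False, False),
        Finding("scada-2", "CVE-0000-0002", 7.8, False, True, True),
    ]
    for f in prioritize(backlog):
        print(f.asset, f.cve_id, f.cvss)
```

Note that the high CVSS score on `hmi-3` still ends up last here, which reflects the point of the talk: context matters more than the raw score.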

Utilize SSVC process

CISA has published an automated SSVC Decision Tree, which Ahmik advises using for making decisions regarding vulnerabilities. Following the decision tree assigns one of the following four statuses to your vulnerability:

- Track: the vulnerability doesn’t need to be remediated immediately, but the organisation should keep tracking it and reassess when more information becomes available.

- Track*: these vulnerabilities have specific characteristics that call for closer monitoring.

- Attend: these vulnerabilities need immediate attention and should be remediated with priority.

- Act: these vulnerabilities need immediate remediation with the highest priority.
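To give a feel for how a decision tree leads to these four outcomes, here is a deliberately simplified sketch in Python. It is not CISA's actual tree, which uses more decision points and a different mapping; the three inputs and the branches below are hypothetical and only illustrate the mechanism.

```python
from enum import Enum


class Decision(Enum):
    TRACK = "Track"
    TRACK_STAR = "Track*"
    ATTEND = "Attend"
    ACT = "Act"


def decide(exploitation: str, automatable: bool, high_impact: bool) -> Decision:
    """Illustrative, simplified decision tree (NOT the official CISA SSVC tree).

    exploitation: "none", "poc" (proof of concept exists) or "active".
    automatable:  whether an attacker can automate exploitation.
    high_impact:  whether exploitation would seriously affect operations.
    """
    if exploitation == "active":
        return Decision.ACT if high_impact else Decision.ATTEND
    if exploitation == "poc":
        if high_impact:
            return Decision.ATTEND
        return Decision.TRACK_STAR if automatable else Decision.TRACK
    if exploitation == "none":
        if automatable and high_impact:
            return Decision.TRACK_STAR
        return Decision.TRACK
    raise ValueError(f"unknown exploitation status: {exploitation!r}")


if __name__ == "__main__":
    print(decide("active", automatable=True, high_impact=True).value)
    print(decide("none", automatable=False, high_impact=False).value)
```

The value of a tree like this is that two people assessing the same vulnerability with the same inputs arrive at the same status, which makes the decision easier to defend to the change management team.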

Assemble a Change Management Team

The change management team validates vulnerability prioritization, determining whether a patch is necessary or if other mitigating controls can be deployed. In the event that a patch is deemed necessary, they will verify with the vendor if the patch is included on the vendor’s approved list. This list is crucial, as vendors only support specific versions of software to run on their OT devices, meaning that updating to the latest software version may not always be feasible.

The change management team ultimately decides whether to deploy a mitigation or accept the risk.

Testing and validation

Replicate the environment, preferably with hardware. Some vendors do provide software emulators that you can use to verify patches and OT software. Ensure that you create a backup plan, as not all OT devices have a rollback option, making it impossible to revert to an earlier version once a patch is deployed.

Deploy patch / mitigating control

Ensure that you have proper backups of IACS software and configuration so that you can roll back if necessary. Keep in mind that you cannot always roll back a patch on OT devices. Additionally, make sure to only roll out patches during dedicated maintenance windows to minimize the impact on the environment.

Documentation and Change Management

Document your latest deployments and make sure your latest configurations and scripts are added to the backups.

Hacking the Mind: How Adversaries use Manipulation and Magic to Mislead

This session was entertaining. The presenter, Robert Willis, is an amateur magician, and the session was more of a magic show. However, it lacked substance, making it less useful from a content perspective. Unfortunately, there are no slides or recordings available for this session.

The premise of this session was that magicians and adversaries use similar techniques to deceive: misdirection, urgency, chaos, etc. The difference is that magicians use these techniques for entertainment, while adversaries use them for malicious purposes.

A few insightful takeaways from this session:

- “Wind your watch”: When faced with urgency, refrain from acting immediately. Take a moment to think before proceeding. This is the most effective way to avoid falling for social engineering tricks.

- “When you look for the gorilla, you’re going to miss other unexpected events”: In other words, when you are focused on one unexpected event, you may overlook other unexpected events.

- Trust is easily exploited and is a vulnerability that cannot be patched. However, you can implement a mitigating control by adopting a “trust but verify” approach.

5th annual RSAC SOC Report

This was the closing presentation of the conference by the RSA SOC, which monitors the public Wi-Fi at the conference. They presented their observations compared to previous years and highlighted some insecure practices that are still prevalent, despite RSA being a cybersecurity conference.

The SOC is sponsored by Cisco, which means that from an architectural perspective, the SOC is equipped with Cisco tools. They use Umbrella to monitor DNS, NetWitness for NDR, Splunk Enterprise Security as SIEM, and Cisco XDR to integrate these tools.

The number of people connecting to the public Wi-Fi and the volume of data transmitted over the network have decreased since the onset of COVID-19. The assumption is that more people are choosing not to connect to open Wi-Fi and are using their own hotspots instead.

The percentage of SSL traffic has increased from 70% to 80% this year. Interestingly, this percentage used to be much higher but sharply declined after COVID-19. In comparison to other regions, the US has the highest percentage of unencrypted traffic, followed by the UK, Asia, and then Europe (which is the opposite of what was mentioned by the speaker yesterday, who stated that Europe was less advanced).

Despite the increase in SSL traffic, the SOC still detected 20,000 credentials sent in clear text over the network. These credentials were related to 99 unique accounts.

In terms of operating systems, Apple is the most popular. The most popular chat app since 2023 is Mchat, taking over from WhatsApp.

During the week, they discovered a major vendor leaking a significant amount of data. This issue was already identified last year and shared with the vendor, who refused to address the data leak and claimed the product was working as expected. They refrained from disclosing the vendor’s name for legal reasons, but it is a major sponsor of RSA and its software is installed on many people’s PCs.

Other instances of clear text information included purchase orders and contracts of a Korean security vendor that were visible on the network, readable emails of individuals still using POP3, and unrestricted access to someone’s home camera system.

The key takeaways from this session are:

- Use a VPN when connecting to an open network. Many apps do not properly encrypt data, allowing anyone on the network to read the content of your communication.

- Enable the OS firewall.

- Keep your systems patched, preferably before attending the conference.

- Check your configuration settings to ensure they are secure.

Closing Celebration

And that concludes RSA 2024. As a parting note, I leave you all with a 20-second recording of the Closing Celebration of RSA featuring a live concert by Alicia Keys.