Today marked the final day of RSA Conference 2025. Sessions ran only in the morning and early afternoon, and the day concluded with a celebration featuring DJ Irie and Jazz Mafia.

Sessions I Attended Today

The sessions I attended today were:

- The Frugal CISO: Running a Strong Cyber Security on a Budget

- Data-Centric Security: Why Granular is Great

- Lessons Learned From Implementing an Intel-Based Purple Teaming Process

- Hello It’s Me, I’m the User: DBIR Insights on the Use of Stolen Credentials

The Frugal CISO: Running a Strong Cyber Security on a Budget

Anand Thangaraju presented valuable strategies for maximizing security with limited resources. He began with insights from the 2024 Budget Benchmark Report from IANS Research and Artico Search, which revealed several key findings:

- The rapid growth in security budgets has plateaued

- Organizations typically allocate 11% of their IT budget to cybersecurity

- This percentage varies by industry (higher in finance and healthcare, lower in retail)

- Approximately half of cybersecurity budgets are dedicated to personnel and training, an area Thangaraju advises against cutting

Eight Budget Optimization Strategies

- Prioritize spending using the 80/20 rule – Focus resources on critical infrastructure and high-impact areas

- Leverage existing tools – Maximize the potential of technologies you already own before purchasing new solutions

- Align with business objectives – Help stakeholders understand risks to gain support for cybersecurity investments

- Automate processes – Implement automation to increase efficiency and reduce costs

- Outsource strategically – Consider outsourcing functions like SOC operations when building in-house capabilities isn’t cost-effective

- Master vendor negotiations – Secure better terms through long-term deals, industry knowledge, pilots, and vendor partnerships. I would also recommend involving your procurement team

- Utilize open source solutions – Consider open source alternatives to reduce licensing costs, while recognizing the maintenance requirements and limitations

- Transfer risk – Implement cyber insurance as part of your risk management strategy

Essential Cybersecurity Investments

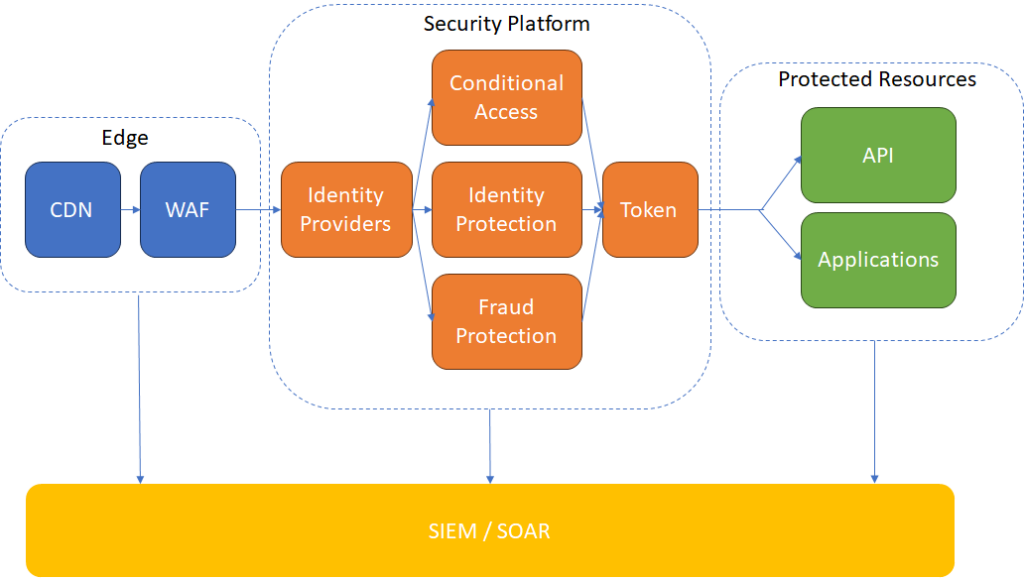

- Identity & Access Management (IAM): MFA, least privilege, SSO

- Endpoint Security: EDR tools, full disk encryption, MDM

- Network Protection: Firewalls, IDS, Zero Trust or VPN, WiFi security

- Email Security: Secure Email Gateway, DMARC/DKIM/SPF implementation, phishing training

- Data Protection: DLP policies, immutable air-gapped backups, encryption in transit and at rest

- Security Monitoring: SIEM implementation, Incident Response Plan, MSSP for 24/7 SOC services

- Vulnerability Management: Asset inventory maintenance, regular vulnerability scanning, automated remediation

- Cloud Security: IAM, workload protection, posture management

- Application Security: WAF, penetration testing, secure SDLC

Unnecessary Investments for Budget-Constrained Organizations

Thangaraju advised avoiding these expenditures when resources are limited:

- In-house SOC

- Premium CTI feeds

- Overly sophisticated tools

- Blockchain security solutions

- Breach and Attack Simulation

- Quantum-resistant cryptography

- Gamified security awareness programs

- AI SOC analysts

- Deception technology

- Premium insider threat tools

While the presentation was somewhat list-heavy with limited time for in-depth explanations, it provided immediately applicable recommendations for practical cybersecurity management.

Data-Centric Security: Why Granular is Great

Will Ackerly and Dana Morris from Virtru explored Attribute-Based Access Control (ABAC) and its integration with data classification.

ABAC Explained

ABAC is an authorization framework that evaluates both user attributes and data attributes to dynamically determine access permissions based on predefined policies.

To illustrate this concept, they used a library analogy:

- Data attributes: Book title, category, type

- User attributes: Role, book club membership, grade level

With these attributes, administrators can create dynamic policies such as:

- “Students in grades 11-12 or full-time employees can borrow non-fiction books”

- “Book club members can borrow high-demand books for five days”

ABAC offers operational advantages as well. For example, when a student advances to a new grade, their access permissions automatically update without manual intervention, as the policies apply to the updated attributes.
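To make the library analogy concrete, here is a minimal Python sketch of how such policies could be evaluated. It is not how Virtru or OpenTDF implement ABAC; the `User` and `Book` classes, their field names, and the `can_borrow` function are assumptions made up for illustration, and the five-day loan period from the second policy is left out to keep the example short.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class User:
    # User attributes from the analogy (field names are hypothetical).
    name: str
    role: str                        # e.g. "student" or "employee"
    grade: Optional[int] = None      # only meaningful for students
    full_time: bool = False
    book_club_member: bool = False


@dataclass
class Book:
    # Data attributes from the analogy (field names are hypothetical).
    title: str
    category: str                    # e.g. "fiction" or "non-fiction"
    high_demand: bool = False


def can_borrow(user: User, book: Book) -> bool:
    """Evaluate the two example policies against the current attributes."""
    # Policy 1: students in grades 11-12 or full-time employees
    # can borrow non-fiction books.
    if book.category == "non-fiction":
        senior_student = user.role == "student" and user.grade in (11, 12)
        full_time_employee = user.role == "employee" and user.full_time
        if senior_student or full_time_employee:
            return True
    # Policy 2: book club members can borrow high-demand books.
    if book.high_demand and user.book_club_member:
        return True
    return False


student = User(name="Ana", role="student", grade=10)
atlas = Book(title="World Atlas", category="non-fiction")

print(can_borrow(student, atlas))  # False: grade 10 does not satisfy policy 1
student.grade = 11                 # the student advances a grade
print(can_borrow(student, atlas))  # True: same policy, updated attribute
```

Nothing in the policy code changes when the student moves up a grade; only the attribute does, which is the operational advantage the presenters described.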

Trusted Data Format (TDF)

The presenters introduced Trusted Data Format (TDF), a standardized format for applying attributes to data objects. They also discussed OpenTDF, an open-source implementation that serves as a policy enforcement point and facilitates attribute assignment to both data and users.

Overall, this presentation provided a clear explanation of ABAC as an emerging approach to identity and access management.

Lessons Learned From Implementing an Intel-Based Purple Teaming Process

Carlos Gonçalves drew a compelling parallel between automotive safety evolution and cybersecurity. Just as crash tests have dramatically improved vehicle safety over decades, continuous security testing is essential for strengthening cyber defenses.

Gonçalves advocated for a collaborative approach involving red teams, blue teams, and Cyber Threat Intelligence (CTI) teams. The CTI component helps prioritize which techniques to test, since the MITRE ATT&CK framework contains too many techniques to test comprehensively. CTI teams identify techniques that are:

- Most prevalent in actual attacks

- Potential choke points in the network

- Actionable for the organization
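The three criteria above can be read as a simple ranking problem. The following Python sketch shows one way to express it; the scoring weights, the prevalence values, and the `Technique` and `prioritize` names are hypothetical and were not part of the talk. Only the ATT&CK technique IDs and names are real.

```python
from dataclasses import dataclass


@dataclass
class Technique:
    attack_id: str      # MITRE ATT&CK technique ID
    name: str
    prevalence: float   # 0-1, how often CTI sees it in real attacks (made-up values)
    choke_point: bool   # whether many attack paths depend on it
    actionable: bool    # whether the organization can act on it now


def prioritize(techniques, top_n=3):
    """Return the actionable techniques, highest score first."""
    def score(t):
        # Hypothetical weighting: prevalence plus a fixed bonus for choke points.
        return t.prevalence + (0.5 if t.choke_point else 0.0)

    actionable = [t for t in techniques if t.actionable]
    return sorted(actionable, key=score, reverse=True)[:top_n]


backlog = [
    Technique("T1078", "Valid Accounts", 0.9, True, True),
    Technique("T1566", "Phishing", 0.8, False, True),
    Technique("T1059", "Command and Scripting Interpreter", 0.7, True, True),
    Technique("T1003", "OS Credential Dumping", 0.6, True, False),
]

for t in prioritize(backlog):
    print(t.attack_id, t.name)
```

With these illustrative numbers the test queue is T1078, T1059, T1566. T1003 is filtered out because it is marked as not actionable, regardless of its score.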

Initially, Banco do Brasil (Gonçalves’ organization) encountered challenges with their testing process, as red teams could execute tests much faster than blue teams could develop corresponding detections and mitigations.

The solution came through co-locating all three teams (red team, blue team, and CTI) in the same physical space. This collaborative environment allowed testing and defensive development to happen simultaneously.

The results were impressive. After implementing this collaborative approach, the organization saw significant productivity gains.

As a next evolution, Banco do Brasil is incorporating its risk management team into the process. This addition helps document and register risks when interesting MITRE ATT&CK techniques aren’t immediately actionable.

Though brief, this presentation effectively illustrated how breaking down team silos can enhance both defensive and offensive security capabilities. The collaborative approach helps blue teams better understand attacker methodologies while giving red teams insight into defensive operations.

Hello It’s Me, I’m the User: DBIR Insights on the Use of Stolen Credentials

Philippe Langlois, a lead data scientist at Verizon and co-author of the Verizon Data Breach Investigations Report (DBIR), presented findings on credential theft and misuse.

Despite a slight decrease in credential-based attacks this year, they remain the primary attack vector.

Credential Theft Methods

InfoStealer malware represents one of the most common credential theft mechanisms. Often distributed through Malware-as-a-Service (MaaS) models, these tools reach victims through:

- Malvertising

- Search engine optimization manipulation

- Phishing campaigns

- Secondary infections from other malware

These InfoStealers collect comprehensive data from compromised devices, including:

- Passwords

- Browser cookies

- Files of interest

- System information that enables device impersonation

One concerning finding: 46% of compromised devices were personal devices containing corporate credentials, which places these compromises outside organizational visibility.

Credential Distribution Channels

Stolen credentials flow through various distribution channels, including marketplaces and Telegram groups. Langlois presented data showing that different credential types (banking, email, etc.) are distributed relatively evenly across these channels.

Credential buyers typically test the validity of purchased credentials and compile “combo lists” for credential stuffing attacks, enabling initial access to target organizations.
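From the defender's side, an attack driven by a combo list tends to show up as one source failing to log in to many different accounts. The Python sketch below illustrates that heuristic only; it was not presented in the session, and the event tuple shape, the `flag_stuffing_sources` name, and the threshold of five accounts are assumptions chosen for the example.

```python
from collections import defaultdict


def flag_stuffing_sources(events, min_accounts=5):
    """Return source IPs with failed logins against many distinct accounts.

    `events` is an iterable of (source_ip, username, success) tuples,
    a hypothetical log shape chosen for this sketch.
    """
    failed_accounts = defaultdict(set)
    for source_ip, username, success in events:
        if not success:
            failed_accounts[source_ip].add(username)
    return sorted(
        ip for ip, users in failed_accounts.items() if len(users) >= min_accounts
    )


# Illustrative data: one source tries six accounts, another mistypes one password.
events = [("203.0.113.7", f"user{i}", False) for i in range(6)]
events += [("198.51.100.4", "alice", False), ("198.51.100.4", "alice", True)]

print(flag_stuffing_sources(events))
```

A real deployment would also need time windows and would have to account for attackers who spread attempts across many source addresses, which this sketch ignores.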

This presentation underscored the ongoing threat of credential theft and highlighted the challenge of protecting credentials used on personal devices outside corporate security controls.